Do technologies drive our values, or do our values drive our technologies?

“Officers take on immense responsibilities, again unlike anything in civilian life, for they have in their control the means of death and destruction.”

-Michael Walzer, Just and Unjust Wars

Do technologies drive our values, or do our values drive our technologies? As artificial intelligence and robotics become more sophisticated and capable, this question grows increasingly exigent. The recent United Nations Convention on Certain Conventional Weapons (CCW) Group of Governmental Experts on lethal autonomous weapons systems (LAWS) served again to raise important questions about the morality of these systems. In the era of facial recognition and other advances in microelectronics and open-source software, current technologies could soon allow robots to kill independently of direct human control. So which comes first, technology or values? The answer is somewhere in the middle: our values must shape our development and use of advanced A.I. and robotics, but we must also recognize that the U.S. cannot be caught flat-footed by potential adversaries that develop and deploy these weapons systems.

Autonomous weapons systems are here to stay because they offer a number of important advantages over human-controlled systems. By 2020, worldwide spending on robotics and related services will reach $188 billion, and robots promise to continue to streamline industrial production, disrupt even more sectors of the economy, and introduce entirely new services. The U.S., China, Russia, and Israel, among others, continue to explore autonomous systems, including autonomous driving technology, which could “navigate areas with a high number of IEDs [improvised explosive devices] or other hazards without risking human life.” The U.S. Army Training and Doctrine Command has laid out a roadmap for its robotics and autonomous systems strategy that details unmanned and manned intelligent teaming and completely autonomous convoy operations by 2025, with autonomous combined arms maneuver by 2035.

Relying on human minds to make all decisions involving the use of lethal force will almost certainly put combatants at a tactical disadvantage at some point. As one of the co-authors of this article, Michael Saxon, argued in Drones and Contemporary Conflict in 2015:

On a battlefield where at least one side has opted for full machine autonomy, those who introduce humans into their decision-making processes might find their machines hopelessly outmaneuvered and quickly destroyed, as will the people that they are intended to protect. In this sort of scenario, human agency, itself, at the point of conflict might become untenable. Our only real decision might be the decision to employ or not employ autonomous machines in the first place.

To be clear, this state of affairs is only an assumption, but it is likely a good one given technological progress, so the question becomes how the U.S. should respond to it. Some argue that the lack of legal and ethical constraint by potential adversaries compels the U.S. to develop and employ autonomous weapons. Should the U.S., then, continue LAWS development, production, and use, or should it signal its unease with these weapons systems by exploring meaningful restrictions on their use when humans are not kept in the loop? Both perspectives have real merit, and West Point’s recently created Robotics Research Center aims to capture not just the engineering component of robotics, but also the crucial value questions associated with these emerging technologies and their applications in war. In this way, the center contributes to West Point’s overall mission to create leaders prepared for the challenges of future battlefields.

Because of reasonable disagreements on the many sides of the LAWS debate, the Robotics Research Center remains a multi- and inter-disciplinary effort. It is investigating not only robotics engineering, but also the implications of the development and use of robots across many of the academic disciplines represented at West Point, including philosophy, which carries the torch at West Point for education and research in the ethics of war. Because the U.S. Department of Defense (DoD) remains committed to the body of laws and norms called Just War Theory and International Humanitarian Law (IHL), technological developments must continue to adhere to our commitments to discrimination, non-combatant immunity, and proportionality, and must allow those who employ force to be held responsible for violations of these commitments.

So, does the need for responsibility in Just War Theory require that humans remain “in the loop” for decisions involving potentially lethal force? This might be illuminated by imagining a human-in-the-loop LAWS system that satisfies all of the requirements of IHL. First, it is discriminate, in that it is capable of differentiating between combatants and non-combatants. Second, it adheres to the rule of non-combatant immunity. Third, it is proportionate, using morally appropriate levels of force. These must all be subject to the checks provided by the human mind in control. Aside from legitimate questions about the ease with which such a system might allow the nation to go to war unnecessarily, this sort of machine seems ideal.

In a future scenario, this same machine (or machine swarms, as is likely), when confronted with a human-out-of-the-loop LAWS system, becomes hopelessly outmatched, as the human-in-the-loop cannot possibly keep up with the speed of the microprocessor. The human-controlled machine is overwhelmed and destroyed. Over time, autonomous LAWS prevail on the battlefield and overwhelm their human opponents, killing them as well.

It is at this point that we rightly pause. Machines thus empowered are now making decisions, independently of humans, about killing humans. Even if they make these decisions well—that is, discriminately and proportionately—should we be comfortable with this state of affairs, or has something gone terribly wrong? Our moral intuitions suggest that something has gone wrong when moral decision-making about killing involves nothing more than a target list and an on-off switch. Killing of this sort seems perhaps too easy, with humans too far away from the important decisions about killing, stripping those targeted of their human dignity. Furthermore, when an autonomous system kills, who is responsible for the killing, justified or not? In current wars, the chain of responsibility and Joint Doctrine for Targeting is fairly straightforward, with soldiers and officers remaining at the center of questions of responsibility. Many argue that responsibility and liability cannot be assessed with the deployment of LAWS where humans are placed out of the loop.

That said, all weapons systems (manned, unmanned, and autonomous) go through an extensive validation, verification, test, and evaluation process. LAWS are no different, as outlined in DoD Directive 3000.09. Commanders still authorize the targeting of legitimate combatants, and that authorization should be made within the bounds established by the Rules of Engagement and International Humanitarian Law. In all cases, the commander is accountable for his or her decisions to kill. LAWS confuse this, however, as the decision to “kill” is potentially further removed from the decision to “kill this or that combatant target,” when the machines make those decisions on their own.

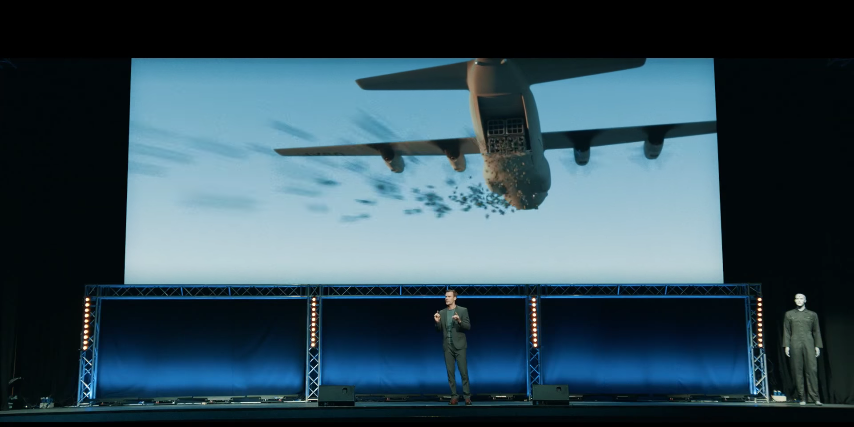

Much of the debate on LAWS focuses on ratifying and enforcing an international ban through the United Nations, in a manner similar to the bans on blinding lasers and cluster munitions. One might argue that these sorts of bans are unenforceable, and these arguments clearly have merit. After all, most technologies are dual-use and are developed in other contexts for non-military purposes, as with smartphones, commercial drones, and self-driving cars. When militarized, these same technologies might resemble something like those in the recently released video titled Slaughterbots by advocates who urge a LAWS ban, though the “slaughterbots” are most menacing when they are employed by shadowy groups to kill non-combatants en masse. The video is startling in its ability to invoke fear, but the advocates for the ban fail to consider how equally sophisticated defensive technologies will emerge. As an argument, the video entertains, but falls flat for several reasons.

Ultimately, what distinguishes the “slaughterbots” from other applications are the shaped explosive charge and the willingness to integrate the various existing technologies to kill human beings. It is hard to imagine how one can effectively ban the integration of existing computer programming (facial recognition and other A.I. technologies), straightforward and well-known explosives design (shaped charges), and other off-the-shelf technologies (nano quadcopters). Even with commitments to keeping humans in the loop, these technologies are so embedded in emerging military and civilian research and development that worthwhile regulation seems implausible, as the line between the two is nearly invisible.

Recognizing our discomfort with them, how can the U.S. military resolve the dilemma presented by lethal autonomous weapons systems? Michael Walzer’s account of Just War Theory, itself long studied at the service academies and elsewhere in the DoD, provides one promising approach to the moral reasoning required to do so. Walzer develops a proposition that he calls “supreme emergency.” In a supreme emergency, the “gloves come off,” so to speak, when a rights-adhering nation is about to be destroyed by a murderous regime. His example of supreme emergency is the British decision to bomb German cities at the beginning of World War II, targeting German non-combatants, which he determines is permissible. The test for permissibility is both the exigency and the nature of the threat. In his example, Great Britain, a rights-respecting nation, was close to being defeated by Nazi Germany and had no other viable military option but to bomb German cities. Great Britain effectively violated rights now for the sake of rights for all time, as a Nazi victory would have guaranteed slaughter. Walzer’s approach has critics, who argue that supreme emergency reflects a move toward either utilitarianism or realism. The sort of moral reasoning at the center of the supreme emergency concept would apply to the use of lethal autonomous systems in the case of a nation facing imminent defeat by a rights-violating regime and its humans-out-of-the-loop LAWS. In such circumstances, a nation may be justified in removing humans from the loop. This moment, however, requires developing the weapons, training with them, and experimenting with methods of operational employment that allow the defeat of an enemy who has chosen to use fully autonomous forces, prior to our reluctant decision to employ them.

Fully autonomous LAWS must still adhere to our commitments to discrimination and proportionality. Even so, removing humans from the loop clearly signals a departure from what we should consider to be very important norms, because full autonomy potentially undermines our traditional notions of agency, responsibility, and human dignity. We should seek to mitigate that damage where we can. We must remain reluctant to make the choice for full autonomy and only make it in the direst of circumstances, but, ultimately, any rights-adhering nation that faces annihilation by a rights-violating, autocratic regime must be prepared to dirty its hands. If the mind faces defeat by the microprocessor, one must be prepared to flip the switch, removing humans from the loop.

LTC Christopher Korpela, Ph.D., is the Director of the Robotics Research Center at West Point. Michael Saxon, Ph.D., USA (Ret.) is Ethics and Humanities Research Fellow, Robotics Research Center at West Point, and the President of Storm King Group, LLC. The views expressed are those of the authors and not necessarily those of the Department of the Army, Joint Staff, Department of Defense, or any agency of the U.S. government.

Photo: Image from the video Slaughterbots by the Future of Life Institute, 2017.

Photo Credit: Stuart Russell/The Future of Life Institute, with permission.